The Effects of AI on Attribution and Trust in Communication

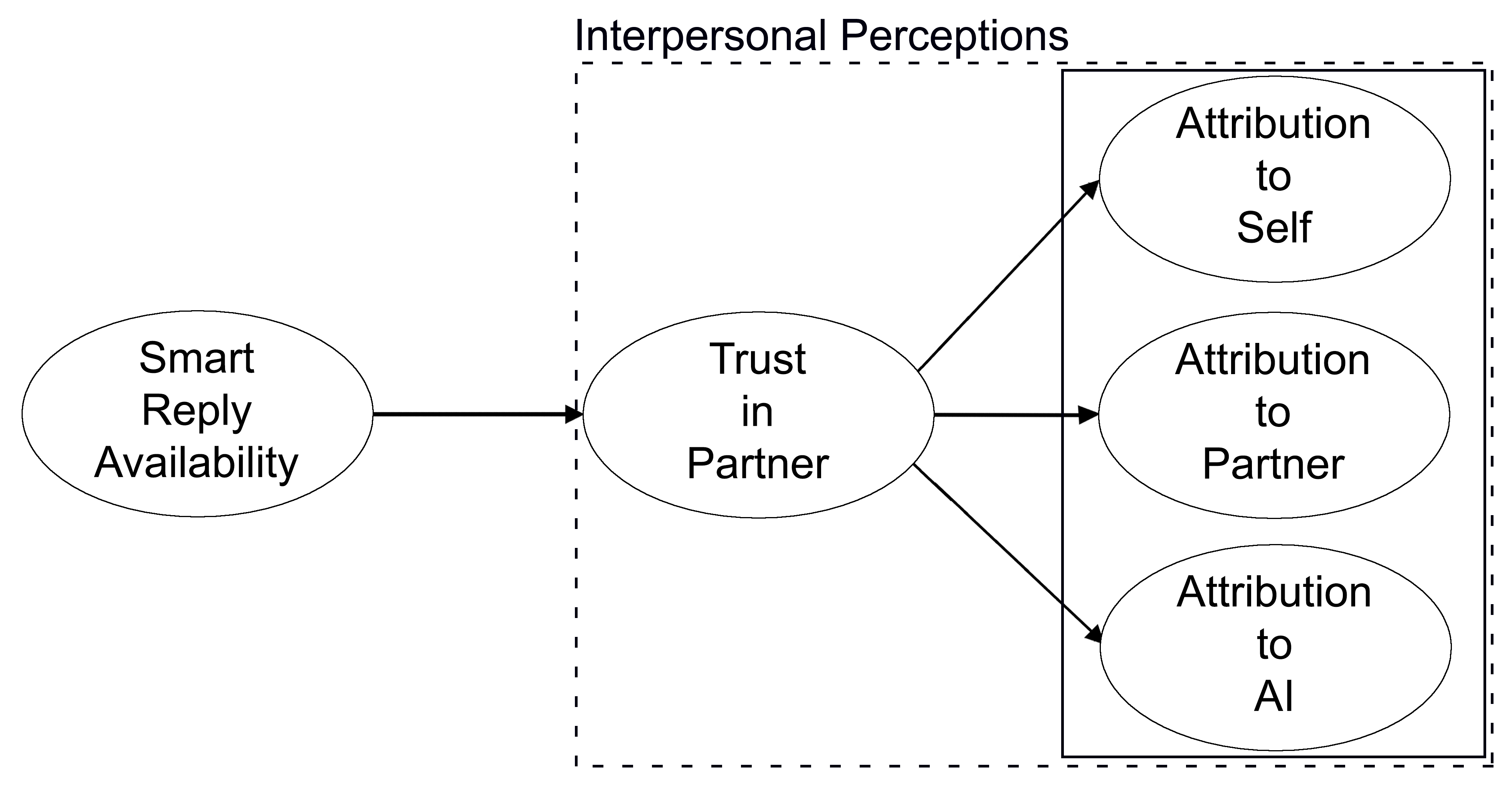

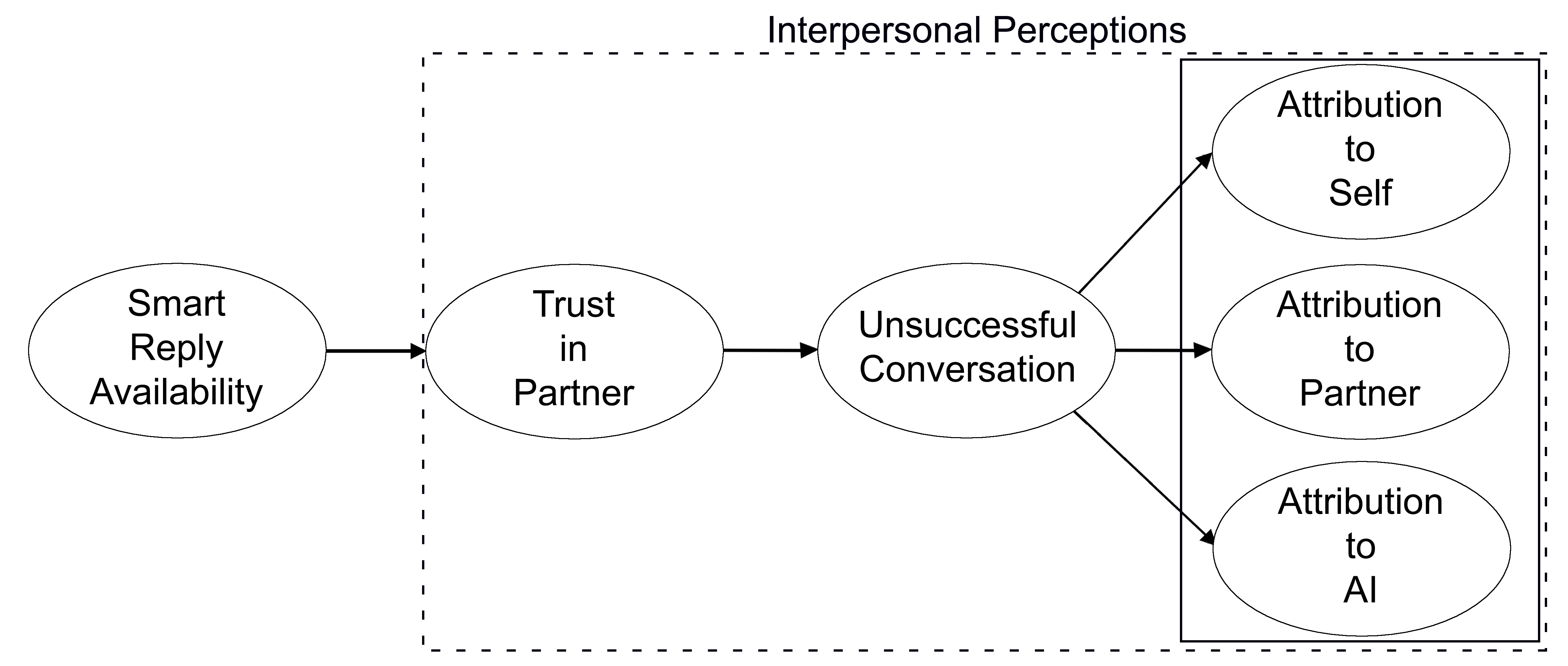

This study is motivated by previous work suggesting that AI is affecting communication in ways that are not yet well understood, and that misattribution is common and problematic when humans collaborate with intelligent systems. We situate these ideas within relevant theories of attribution and trust to examine how AI could affect interpersonal dynamics.

Methodology

In this study, the independent variable was the presence of AI-generated smart replies, and the outcome variables were trust and attribution.

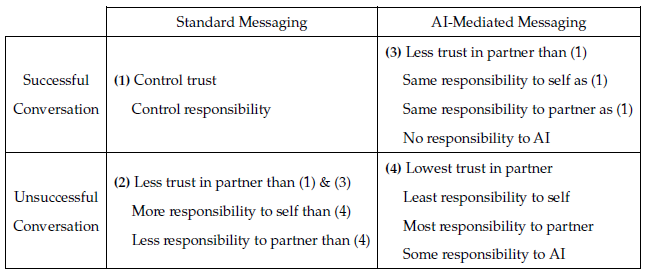

• Conditions 1–2 served as controls against which we compared the experimental, AI-mediated messaging conditions (3–4). The table also shows our hypotheses for each condition, which we derived from the reviewed literature.

• The name on the confederate account was changed each day to a name drawn from a large online list of gender-neutral baby names.

• Initial small talk between the participant and the confederate served to familiarize participants with the messaging application and to build trust between them.

• Participants then worked with the confederate to complete the lifeboat task.

Conversations and smart replies were transcribed and analyzed using Linguistic Inquiry and Word Count (LIWC), a dictionary-based text analysis tool that computes the percentage of words in a text belonging to categories that reflect linguistic processes, psychological processes, and personal concerns.
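As a rough illustration of the dictionary-based approach described above, the sketch below looks up each word of a message in category word lists and reports, for each category, the percentage of all words that match it. It is a minimal sketch under stated assumptions, not LIWC itself: the category names (`posemo`, `negemo`, `we`), the word lists, the regex tokenizer, the LIWC-style trailing `*` prefix wildcard, and the function `category_percentages` are all hypothetical stand-ins for LIWC's actual dictionary and implementation.

```python
import re
from collections import Counter

# Hypothetical toy dictionary: NOT the real LIWC dictionary.
# Entries ending in "*" are assumed to match any word with that prefix.
TOY_DICTIONARY = {
    "posemo": ["good", "great", "happ*", "agree*"],
    "negemo": ["bad", "wrong", "worr*"],
    "we": ["we", "us", "our"],
}


def tokenize(text):
    """Lowercase the text and split it into word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def matches(word, entry):
    """Return True if word matches a dictionary entry, honoring a trailing '*' wildcard."""
    if entry.endswith("*"):
        return word.startswith(entry[:-1])
    return word == entry


def category_percentages(text, dictionary=TOY_DICTIONARY):
    """Return, for each category, the percentage of tokens in text that match it."""
    tokens = tokenize(text)
    if not tokens:
        return {category: 0.0 for category in dictionary}
    counts = Counter()
    for word in tokens:
        for category, entries in dictionary.items():
            # A word counts at most once per category but may count in several categories.
            if any(matches(word, entry) for entry in entries):
                counts[category] += 1
    return {category: 100.0 * counts[category] / len(tokens) for category in dictionary}


if __name__ == "__main__":
    message = "We agree, that is a great plan for our team."
    print(category_percentages(message))
```

For the sample message, 2 of its 10 words fall into the hypothetical `posemo` category ("agree", "great") and 2 into `we` ("we", "our"), so both score 20.0, while `negemo` scores 0.0. Scoring by percentage rather than raw count keeps short and long conversations comparable.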

• After examining the confederate’s linguistic consistency within each condition, we excluded 15 conversations from the analysis.

• All participants passed a manipulation check, indicating that our manipulation of conversation outcome (successful vs. unsuccessful) worked as intended.

Main Findings

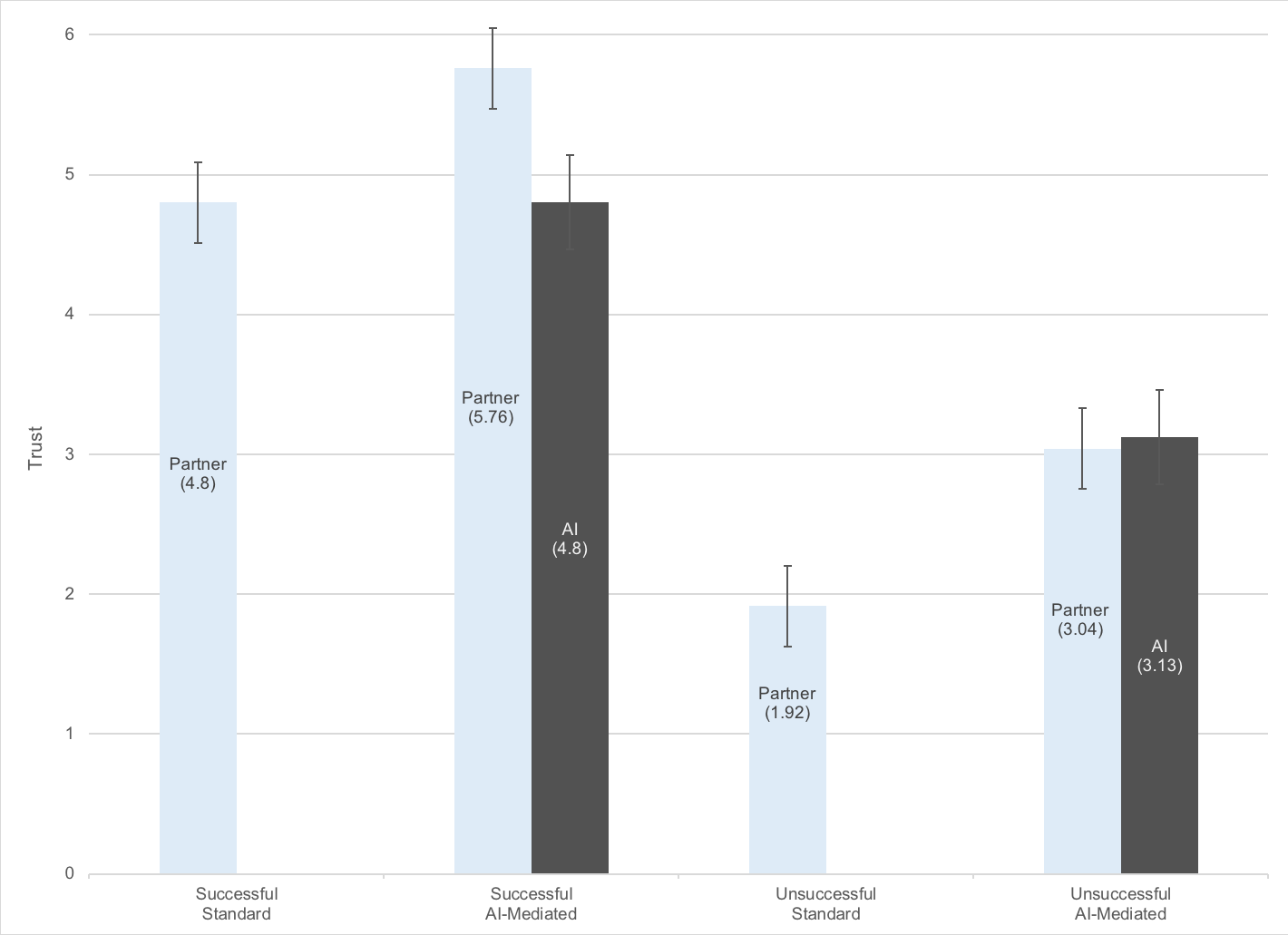

Perceptions of trust were affected by the presence of smart replies, regardless of whether the interaction was successful or not.

• AI in communication increases trust in the human partner.

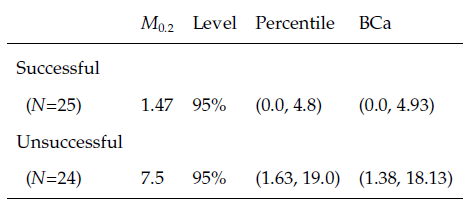

• This suggests that, when interactions are successful, trust may be only a weak mediator of attribution, or not a mediator at all.

• AI could act as a scapegoat, taking on some of the responsibility for the team’s failure.

• When things go wrong, AI is granted participant status and is perceived as affecting the outcome of the conversation.

Recommendations

AI is granted agency when things go wrong in communication and could be used to resolve team conflict and improve communication outcomes.

Users appear to grant AI agency only when conversations go awry, so it cannot be assumed that users perceive AI as having agency in everyday, successful conversations.